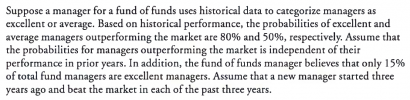

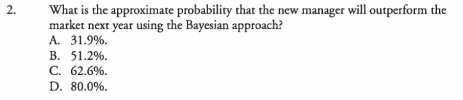

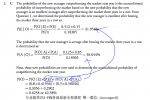

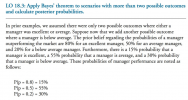

This explores the answer to Miller's sample question in Chapter 6 of Mathematics and Statistics for Financial Risk Management. There are three types of managers: out-performers (MO), in-line performers (MI), and under-performers (MU). The prior probability that a manager is an out-performer is 20.0%. If we then observe two years of market-beating performance (i.e., the evidence), what is the posterior (updated) probability that the manager is an out-performer?
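The update can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the book's or David's method: it assumes each year's result is independent given the manager's type, so the likelihood of two market-beating years is P(beat | type)^2, and it applies Bayes' rule, P(MO | E) = P(E | MO) × P(MO) / Σ P(E | Mj) × P(Mj). Only the 20% prior for out-performers comes from the question above. The 60% and 20% priors for MI and MU, and the one-year beat probabilities (80%, 50%, 20%), are illustrative assumptions here and should be checked against Miller's question or David's XLS. The function name `posterior` is hypothetical.

```python
def posterior(priors, p_beat, years_beating):
    """Posterior probability of each manager type after observing
    `years_beating` consecutive market-beating years.

    priors: dict of type -> prior probability (should sum to 1)
    p_beat: dict of type -> probability of beating the market in one year
    Assumes annual results are independent given the manager type.
    """
    # Joint probability P(evidence and type) = prior * likelihood, likelihood = p^n
    joint = {k: priors[k] * p_beat[k] ** years_beating for k in priors}
    # Unconditional probability of the evidence (law of total probability)
    evidence = sum(joint.values())
    # Normalize the joint probabilities to get the posteriors
    return {k: v / evidence for k, v in joint.items()}


# Only the 20% MO prior is given in the question; all other inputs are assumed.
priors = {"MO": 0.20, "MI": 0.60, "MU": 0.20}
p_beat = {"MO": 0.80, "MI": 0.50, "MU": 0.20}

post = posterior(priors, p_beat, years_beating=2)
for manager_type, prob in post.items():
    print(f"{manager_type}: {prob:.4f}")
```

With these assumed inputs, the out-performer posterior rises from the 20% prior to roughly 44.8% (0.128 / 0.286), because two market-beating years are far more likely under MO than under MU.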

Here is David's XLS: https://trtl.bz/220122-bayes-three-states

Thank you!!